How to use meta-analysis in A/B testing

Meta-analysis is a powerful tool for experimenters who want to maximize insights from their A/B tests. Rather than looking at test results in isolation, meta-analysis allows teams to find patterns across multiple experiments, leading to smarter, more data-driven decisions.

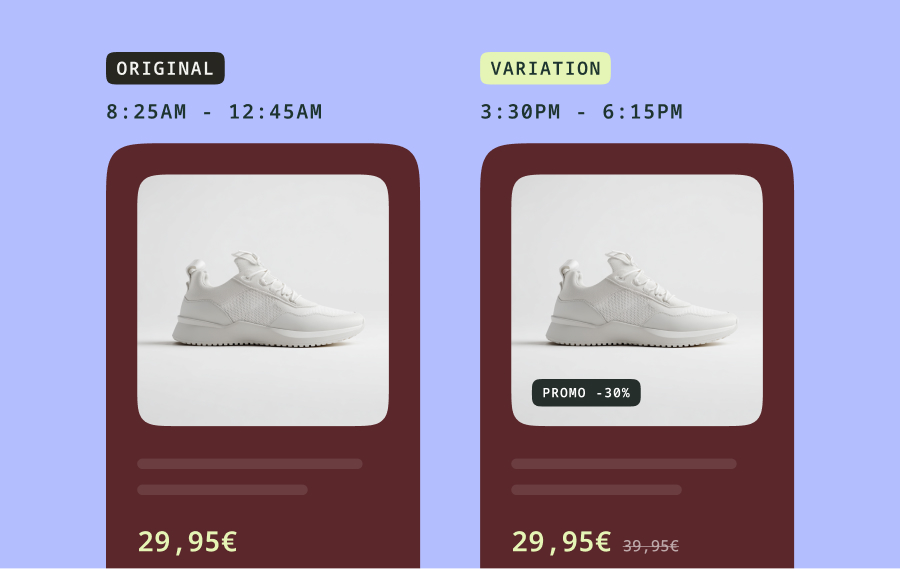

Imagine a retail brand runs 10 experiments on its checkout process over the course of a year. Each test changes a different element, such as button color, form length, or shipping options, and the results are mixed: a few winners, a few losers, and several inconclusive outcomes. By conducting a meta-analysis, the team identifies a recurring pattern: every time they reduced friction in the checkout flow (e.g., fewer form fields or simplified payment options), conversion rates improved. With this insight, they form a new hypothesis that focuses on reducing friction across the entire customer journey, not just in checkout. This approach leads to more targeted and impactful tests going forward.

As Director of Experimentation at Conversion.com, Sim Lenz has built hundreds of experimentation programs and has seen meta-analysis help them optimize their results. We asked him to walk us through how to use meta-analysis in testing.

What data do I need to run a meta-analysis?

To conduct a simple meta-analysis, you need at least two data points for each test: the outcome of the test (winner, loser, or inconclusive) and one other variable from the experiment. That second variable should be the element you want to understand better, such as a feature of the user experience that influences user behavior, which we call a “lever.”

Start by gathering accurate data from each A/B test. Here’s what to collect:

- Test outcome: winner, loser, inconclusive.

- What was tested: the specific lever or variable you changed in the experiment.

- Sample size: how many people participated in the test?

- Test duration: how long did the experiment run?

- Significance level: did the result reach statistical significance?
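
To keep these fields consistent from test to test, it can help to store each experiment as a typed record and derive the outcome label from the significance result instead of typing it in by hand. The Python sketch below is one way to do that, not Conversion.com's method. It assumes a two-sided p-value, a significance threshold of 0.05, and hypothetical field names (`lever`, `sample_size`, `duration_days`, `relative_lift`, `p_value`) that don't come from the interview:

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    WINNER = "winner"
    LOSER = "loser"
    INCONCLUSIVE = "inconclusive"


@dataclass(frozen=True)
class Experiment:
    """One A/B test, stored with the fields listed above (names are hypothetical)."""
    name: str
    lever: str            # what was tested, e.g. "form length"
    sample_size: int      # total visitors across all variants
    duration_days: int    # how long the test ran
    relative_lift: float  # observed change in the primary metric, e.g. 0.035 = +3.5%
    p_value: float        # two-sided p-value from the test's own analysis

    def outcome(self, alpha: float = 0.05) -> Outcome:
        """Label the test from its significance result rather than by hand."""
        if self.p_value >= alpha:
            return Outcome.INCONCLUSIVE
        return Outcome.WINNER if self.relative_lift > 0 else Outcome.LOSER


test = Experiment("Shorter checkout form", "form length", 48_000, 21, 0.035, 0.012)
print(test.outcome().value)  # winner
```

Deriving the label from one shared threshold means a “winner” means the same thing in every test, which is what makes the later comparison across tests fair.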

While it’s technically possible to do a meta-analysis with just two experiments, the results will be much stronger if you include data from more tests. The more you collect, the easier it is to spot patterns and draw meaningful conclusions.
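
A quick way to see why more tests help is to treat each test on a lever as a win or a non-win and put a confidence interval around that lever's win rate. The sketch below uses the Wilson score interval, a standard interval for a proportion. That choice is ours, not a method named in the interview. It uses z = 1.96 for roughly 95% coverage, and the counts are invented for illustration:

```python
import math


def wilson_interval(wins: int, tests: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a win rate of wins/tests (z=1.96 is ~95%)."""
    if tests == 0:
        raise ValueError("need at least one test")
    p = wins / tests
    denom = 1 + z**2 / tests
    centre = (p + z**2 / (2 * tests)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / tests + z**2 / (4 * tests**2))
    return centre - half_width, centre + half_width


# Same 60% win rate, very different certainty.
for wins, tests in [(3, 5), (30, 50)]:
    low, high = wilson_interval(wins, tests)
    print(f"{wins}/{tests} wins: {low:.0%} to {high:.0%}")
```

Both rows show a 60% win rate. With 5 tests, though, the interval runs from about 23% to 88%. With 50 tests it narrows to about 46% to 72%.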

Can I conduct meta-analysis on various types of data, e.g., user testing, analytics, and A/B tests?

I recommend focusing your meta-analysis on A/B tests, because their structured, consistent data is easier to compare. To make that comparison reliable, make sure you do the following:

- Centralize your data. Store all your experiment results in a single place, such as a spreadsheet, database, or specialized test repository tool. Having everything in one place saves time and makes it easier to spot trends.

- Standardize how you record metrics. Use consistent definitions and formats for metrics such as conversion rate and test duration, so you’re comparing apples to apples.

Once your data is centralized and standardized, you can look for recurring patterns. For example, do specific kinds of changes, such as headline tweaks, consistently perform better than others? This analysis can help you make informed decisions about future tests.
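
With results in one standardized table, a first pass at spotting recurring patterns can be a simple tally of outcomes per lever. The Python sketch below assumes each test has already been tagged with a lever and an outcome label. The levers and results are invented for illustration and are not real data. `summarize_by_lever` is a hypothetical helper name:

```python
from collections import Counter, defaultdict

# Invented, already-standardized results: one (lever, outcome) pair per test.
results = [
    ("form length", "winner"),
    ("form length", "winner"),
    ("form length", "inconclusive"),
    ("button color", "inconclusive"),
    ("button color", "loser"),
    ("shipping options", "winner"),
    ("shipping options", "inconclusive"),
    ("button color", "inconclusive"),
]


def summarize_by_lever(rows):
    """Count outcomes per lever and return (lever, tests, win_rate), best first."""
    counts = defaultdict(Counter)
    for lever, outcome in rows:
        counts[lever][outcome] += 1
    summary = []
    for lever, tally in counts.items():
        tests = sum(tally.values())
        summary.append((lever, tests, tally["winner"] / tests))
    # Highest win rate first; when win rates tie, the lever with more tests ranks higher.
    return sorted(summary, key=lambda row: (row[2], row[1]), reverse=True)


for lever, tests, win_rate in summarize_by_lever(results):
    print(f"{lever:<18} {tests} tests  win rate {win_rate:.0%}")
```

Here “form length” comes out on top (2 of 3 tests won). With counts this small, though, a high win rate is a prompt for a new hypothesis rather than a conclusion.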

How can AI help prepare data and conduct the meta-analysis?

AI can make parts of the meta-analysis process faster and easier. Here’s how I’ve seen it work.

- Tagging data automatically: AI tools can help label experiment data, like identifying which lever or variable was tested in each experiment (e.g., Levers AI).

- Prioritizing insights: Machine learning can highlight which experiments are worth digging into based on impact or statistical confidence (e.g., ConfidenceAI).

AI doesn’t replace human judgment, but it can take care of repetitive tasks so you can focus on making decisions. That said, our own process involves organizing and interpreting the data manually, which is how we prefer to work.

When conducting meta-analysis, should I mix publicly available test data with my internal company data?

I’d avoid mixing public data with your internal data. Publicly available test results often don’t include the raw data, which makes it hard to know whether they’re reliable or comparable to your own experiments. Stick to your internal data; it’s more consistent and trustworthy.

What should I do once I’ve identified patterns in meta-analysis?

Once you’ve spotted patterns, the next step is to put those insights to use. Here’s what I’d recommend:

- Form new hypotheses. Use the patterns you’ve found to develop new ideas for experiments.

- Prioritize testing. Focus on the changes most likely to have a big impact based on your learnings.

- Share the insights. Make sure your team knows what you’ve discovered. This helps everyone align around what’s working and what to test next.

Meta-analysis isn’t just about looking back—it’s a powerful tool for planning your next steps.

--

This interview is part of Kameleoon's Expert FAQs series, where we interview leaders in data-driven CX optimization and experimentation. Sim Lenz is the Director of Experimentation at Conversion.com, a world-leading CRO agency. Conversion.com helps businesses unlock the full potential of experimentation through strategies like meta-analysis.

If you’d like to keep up with all the industry's top experimentation-related news, you can find industry leaders to follow in our Expert Directory.