How to build an A/B testing roadmap

This interview is part of Kameleoon's Expert FAQs series, where we interview leaders in data-driven CX optimization and experimentation. Haley Carpenter is a CRO thought leader from Austin. Having honed her skills for several years at top companies, she’s putting her expertise into running her own company, Chirpy.

What is an A/B testing roadmap, and why is it important to have one?

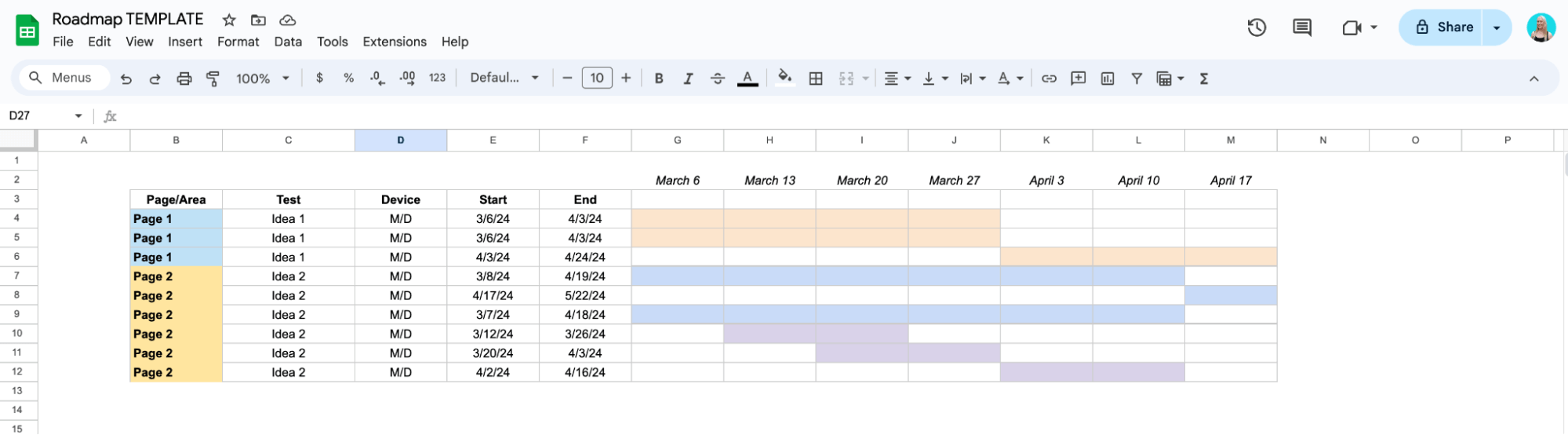

An A/B testing roadmap is a document setting out which split tests you plan to run over time. It can take many different formats, but generally, a Gantt chart format is used.

An A/B testing roadmap is critical for success in nearly any experimentation program. While lower-velocity testing programs can sometimes get away without one, roadmaps can benefit any team. They're easy to make, as they don't require any specialized tools. Sure, automations and integrated systems are nice, but they are not a necessity for A/B testing success.

An A/B testing roadmap is just that—a map. It lets you quickly see the what, who, when, where, and sometimes the how and why. It allows you to see if you’re at maximum velocity, where the holes are, and where unwanted testing overlap may occur over the next few months.

What inputs are needed to create an A/B testing roadmap?

At a minimum, you need the following information to create an A/B testing roadmap:

- Page(s)/area of testing

- Test name and the change you are making to the variant, e.g., Security logos on checkout

- Device(s)

- Minimum Detectable Effect (MDE)

- Duration estimate

- Estimated start date

- Estimated end date

Once you have the above, start adding the information into a table, which you can turn into a visual view as a Gantt chart.
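As a rough illustration, the inputs listed above could be captured in a small table like the one below before moving them into Google Sheets or Airtable. This is only a sketch: the `RoadmapEntry` class, its field names, the second test, and all dates are made up for the example, and the estimated end date is assumed to be the estimated start date plus the duration estimate.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class RoadmapEntry:
    """One row of a hypothetical roadmap table (field names are illustrative)."""
    page: str            # page(s)/area of testing
    test_name: str       # test name and the change made to the variant
    devices: str         # device(s)
    mde: float           # minimum detectable effect, e.g. 0.10 for a 10% relative lift
    duration_days: int   # duration estimate
    start: date          # estimated start date

    @property
    def end(self) -> date:
        # Assumption: estimated end date = estimated start date + duration estimate
        return self.start + timedelta(days=self.duration_days)


# Example rows; the second test and all dates are invented for illustration
roadmap = [
    RoadmapEntry("Checkout", "Security logos on checkout", "All", 0.10, 14, date(2024, 1, 8)),
    RoadmapEntry("Homepage", "Shorter hero copy", "Mobile", 0.08, 21, date(2024, 1, 15)),
]

# Print the rows in start-date order, roughly what a Gantt or Timeline view shows
for entry in sorted(roadmap, key=lambda e: e.start):
    print(f"{entry.start} -> {entry.end}  {entry.page:<10} {entry.test_name} ({entry.devices})")
```

Each row maps to one bar in the Gantt chart, with the start and end dates setting the bar's position and length.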

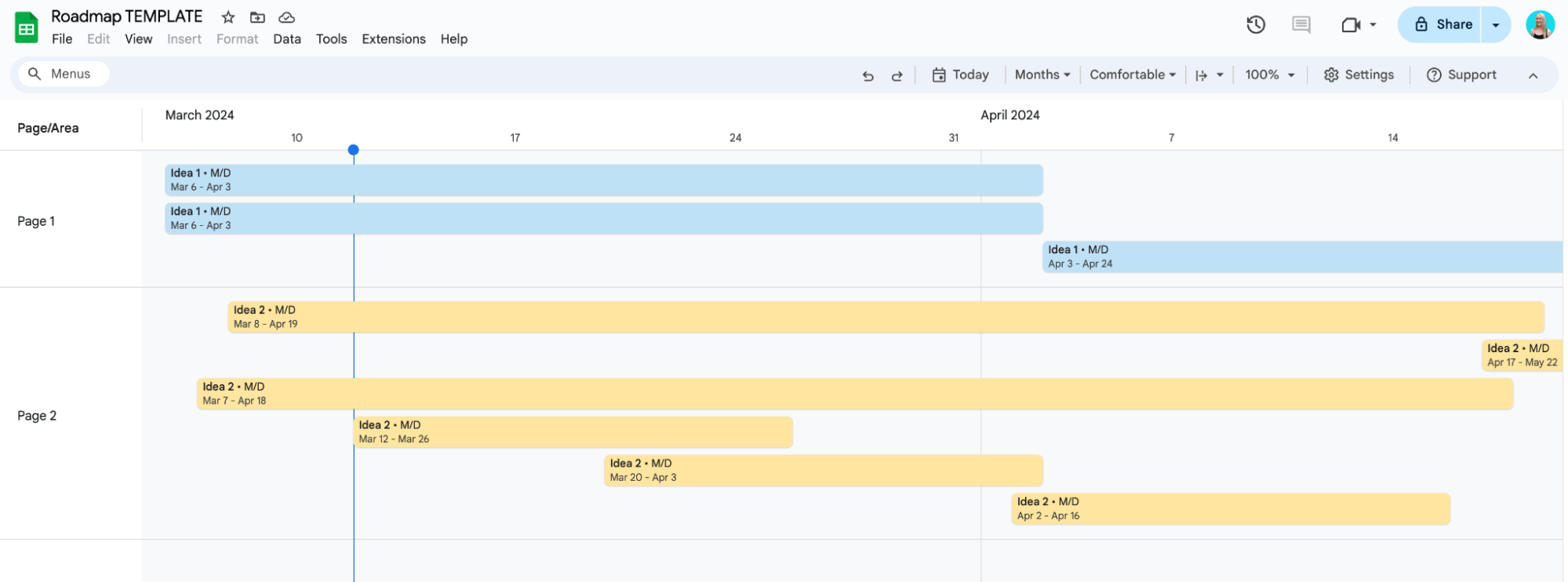

You don’t have to create your roadmap with a fancy tool. You can easily build a great version in Google Sheets, especially with their new Timeline option. I’m a huge fan of Airtable, though.

Table View of an A/B testing roadmap with the “Old” Gantt Chart view in Google Sheets

Table View of an A/B testing roadmap with “New” Timeline View in Google Sheets. Template available here for download.

How do I determine which tests to prioritize in my roadmap?

Your prioritization framework determines which split tests go into your testing roadmap. Many teams don’t have a prioritization framework, or at least an effective one, which is wild since it’s so easy to implement. I usually tell teams it’s better to have something than nothing.

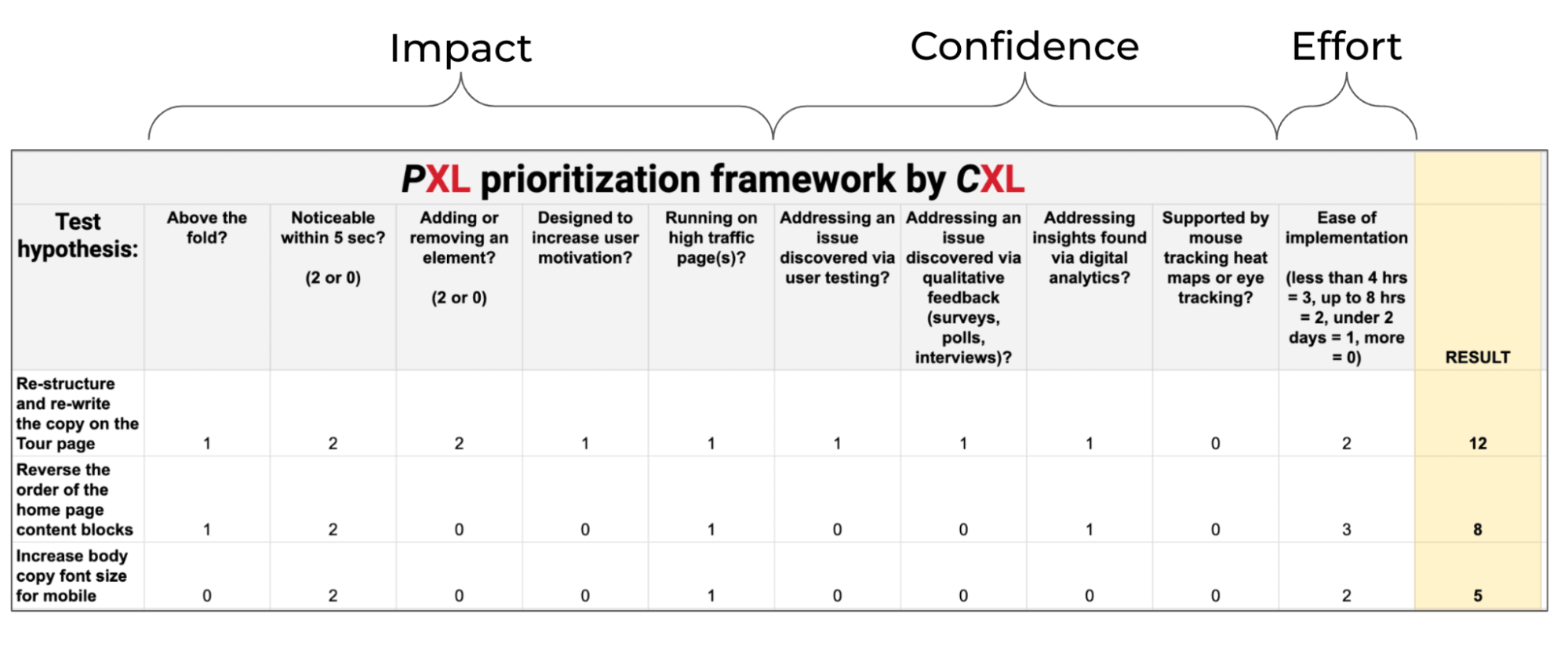

There are many prioritization frameworks available. Teams just need to choose one that makes sense for their use case and is most likely to be used in their A/B testing program. Common prioritization frameworks include PIE, ICE, and PILL.

ICE stands for impact, confidence, and effort—and these components are listed as three separate columns in a table. To use this framework, list your test ideas in individual rows. Then, go through each idea and score it according to impact, confidence, and effort (using whatever scales you like, e.g., 1-5 or 1-10). Add the scores together to get a total score for each idea. Use the “sort” function to arrange the total scores from highest to lowest. Boom, now you have a prioritized set of tests to add to your roadmap. However, what defines impact? What defines confidence? What defines effort? It’s unclear and quite subjective. You could have ten different people score a list of ideas and get many different outcomes.
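The ICE scoring steps described above can be sketched in a few lines. The ideas and scores here are invented, the scale is assumed to be 1-5, and effort is assumed to be scored so that a higher number means less effort (otherwise adding it to the total would reward harder tests).

```python
# Hypothetical test ideas scored on a 1-5 scale.
# Assumption: for "effort", a higher score means the test is easier to build.
ideas = [
    {"idea": "Security logos on checkout", "impact": 4, "confidence": 3, "effort": 5},
    {"idea": "Shorter hero copy", "impact": 2, "confidence": 4, "effort": 4},
    {"idea": "Redesigned pricing page", "impact": 5, "confidence": 2, "effort": 1},
]

# Add the three scores together to get a total score per idea
for idea in ideas:
    idea["total"] = idea["impact"] + idea["confidence"] + idea["effort"]

# Sort from highest to lowest total, like the "sort" function in a spreadsheet
ranked = sorted(ideas, key=lambda i: i["total"], reverse=True)

for position, idea in enumerate(ranked, start=1):
    print(f"{position}. {idea['idea']} (total: {idea['total']})")
```

Because the inputs are judgment calls, two people scoring the same list can easily produce a different order, which is the subjectivity problem described above.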

ICE Prioritization framework

I prefer the PXL prioritization framework because it removes the subjectivity of ICE to a certain extent. PXL is an expanded version of the ICE framework, and its grading criteria are more defined and objective, consisting mainly of yes/no questions. PXL can be too robust for some teams, but I do think it’s worth the extra effort. It’s ideal for teams that have a dedicated CRO program manager.

PXL A/B test prioritization Framework. Get free access to the template here.

How can you ensure your testing roadmap doesn't conflict with other tests or activities across the business?

Test information and details on where in the experience you’re testing should either live in one place (e.g., an A/B testing roadmap) or in two integrated places. The former option is ideal and easier, in my opinion, and should be used cross-functionally. One of my favorite ways to execute this is to create my A/B testing roadmap in Airtable, a relational database with tons of functionality for slicing and dicing.
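When every test lives in one place, checking for conflicts becomes a simple query. The sketch below is one possible way to flag them, assuming a conflict means two tests on the same page with overlapping date ranges. The test names, pages, and dates are hypothetical, and a real roadmap might also compare devices or audiences.

```python
from datetime import date
from itertools import combinations

# (test name, page, estimated start, estimated end); all values are illustrative
tests = [
    ("Security logos on checkout", "Checkout", date(2024, 1, 8), date(2024, 1, 22)),
    ("One-page checkout", "Checkout", date(2024, 1, 15), date(2024, 2, 5)),
    ("Shorter hero copy", "Homepage", date(2024, 1, 15), date(2024, 2, 5)),
]


def find_overlaps(tests):
    """Return pairs of test names that share a page and overlap in time."""
    conflicts = []
    for a, b in combinations(tests, 2):
        same_page = a[1] == b[1]
        # Two date ranges overlap when each one starts before the other ends
        dates_overlap = a[2] <= b[3] and b[2] <= a[3]
        if same_page and dates_overlap:
            conflicts.append((a[0], b[0]))
    return conflicts


conflicts = find_overlaps(tests)
for first, second in conflicts:
    print(f"Overlap: '{first}' and '{second}'")
```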

Teams that work in silos without consistent systems and processes are typically the most chaotic and ineffective. It’s not difficult to fix as long as people are willing to make changes, prioritize the effort, and be open-minded.

What artifacts and rituals should be used to maintain your testing roadmap?

A/B testing plans and test documentation should align with the roadmap, and key points of the roadmap come from pre-test calculations. Check out my video here on pre-test calculations.
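For readers who want a feel for how a pre-test calculation feeds the MDE and duration columns, here is a minimal sketch. It uses a common normal-approximation formula for comparing two conversion rates, which is not necessarily the method covered in the video. The function names, baseline rate, traffic figure, significance level, and power are all assumptions for the example.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided test.

    Uses the standard normal approximation for two proportions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # conversion rate if the MDE is reached
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def duration_days(sample_per_variant, daily_visitors, variants=2):
    """Days needed if daily traffic is split evenly across all variants."""
    return ceil(sample_per_variant * variants / daily_visitors)


# Illustrative inputs: 3% baseline conversion rate, 10% relative MDE, 5,000 visitors a day
n = sample_size_per_variant(baseline_rate=0.03, relative_mde=0.10)
days = duration_days(n, daily_visitors=5000)
print(f"Visitors per variant: {n}, estimated duration: {days} days")
```

A smaller MDE or lower traffic pushes the duration estimate up, which in turn moves the estimated end date on the roadmap.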

Roadmaps should be updated as needed, whether daily, weekly, monthly, or quarterly. The owners of the roadmap will depend on the governance model used in your business. All primary CRO players should constantly use the testing roadmap for strategic planning. It should also be viewed as needed by others in the company when submitting test ideas or by those who are interested in test learnings to apply to their own initiatives.

Test roadmaps can also be helpful for CRO program reporting, specifically for visualizing timing and velocity.

Who should own the testing roadmap? Experimentation, product, or marketing teams?

It depends on what type of CRO/testing governance model the company has, who’s involved now and in the future, and what the level of CRO knowledge is across different teams.

An additional consideration is whether to have a core group of owners, who can sometimes become gatekeepers, or to let more people self-serve to get more involvement. But overall, I still think roadmap ownership is company-specific and depends on your team structure, culture, testing maturity, etc. There’s no blanket answer.

Haley, you started Chirpy, an experimentation agency, last year. What convinced you to take the leap, and what sets Chirpy apart from other agencies?

From day one in CRO, I knew I wanted to work for myself. After a long history of gaining experience at top companies in the digital industry (and experiencing a lot of personal growth over the years), I wanted to spread my wings every day. I had a calling to do something more authentically me, which indicated it was finally time to go out on my own. I decided to create something different, believing it would resonate with others.

I have seen too many people have unpleasant or mediocre experiences with agencies throughout my career. It’s often due to one or more of the following reasons: communication, project management, expertise, transparency, or billing. Why does this happen so much? I believe these companies’ internal cultures are not agile, direct, humble, or knowledgeable enough to recognize and address the root problem. However, as a client, you would never know this until it is too late because of their knack for making big promises, many of which are never fulfilled.

Furthermore, this industry cultivates company cultures that aren’t beneficial to clients, even if a company looks like they’re different on the outside. Many people are promoted based on tenure, not merit. If it’s a toxic culture, then gender and other demographics are also factors. The barriers to entry and bars for success are extremely low. Consequences for underperforming are often low.

I was tired of the shenanigans, chasing job titles, having my destiny semi-controlled by others, and being told what to do by people who shouldn’t be dictating anything. My dream was to be an entrepreneur.

Now, I’m hashing out whatever it takes to run a top-notch business with a no-bullsh*t attitude, inspired by my personality and working with Nordic teammates.

If you pick Chirpy, I’ll get you to where you want to go and sprinkle in some fun while we do it. We run at a velocity that most clients haven’t experienced before, and you won’t get any plug-and-play methodologies or junior-level account teams when you’ve been promised seasoned experts. At Chirpy, we elevate a business’s decision strategy, expose them to less risk, and drive performance through the roof.