Warning! Is the world sabotaging your A/B Tests?

A/B Testing is great, but if we're being honest, it’s pretty easy to screw up. You have to create a rigorous process, learn how to formulate hypotheses, analyze data, know when it’s safe to stop a test, understand a bit of statistics… And did you know that even if you do all of the above perfectly, you might still get imaginary results? Did you raise an eyebrow? Are you slightly worried? Good.

Let’s look into it together. What (or who) in the world is screwing up your A/B tests when you execute them correctly? The world is. Well, more precisely, what are called external validity threats. And you can’t ignore them. Your A/B test results might be flawed if:

- You don’t send all test data to your analytics tool

- You don’t make sure your sample is representative of your overall traffic

- You run too many tests at the same time

- You don’t know about the Flicker Effect

- You run tests for too long

- You don’t take real-world events into account

- You don’t check browser/device compatibility

You don’t send all test data to your analytics tool

Always have a secondary way to measure and compare your KPIs.

No tool is perfect, and humans are even less likely to be. Implementation issues with your A/B Testing tool are veeery common. Your goals and metrics might not be recording properly, or your targeted sample could be badly set up, to mention just a couple of examples. If you see any discrepancies in your data, or if a metric is not tracked correctly, stop everything. Check your goals, metrics and scripts, then start over. Same thing at the end of a test: make sure to double-check your results against your analytics tool before doing anything. Cross-check your data like your business depends on it (because it does). It would be a shame to do everything right and end up with flawed results because your A/B Testing tool was set up wrong or didn’t work properly.
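
To make the cross-check concrete, here is a minimal Python sketch that compares per-variation counts reported by your A/B testing tool with the counts from your analytics tool, and flags any gap above a tolerance. The function name, the dictionary layout and the 5% tolerance are hypothetical choices for illustration, not the API of Kameleoon or of any analytics tool; pick a tolerance that matches the gap you normally see between your own tools.

```python
def find_discrepancies(ab_tool, analytics, tolerance=0.05):
    """Return variations whose counts differ by more than `tolerance` (relative gap).

    ab_tool and analytics map a variation name to a count (visitors or
    conversions). The 5% default tolerance is a hypothetical threshold.
    """
    flagged = {}
    for variation in sorted(set(ab_tool) | set(analytics)):
        a = ab_tool.get(variation, 0)
        b = analytics.get(variation, 0)
        reference = max(a, b)
        if reference == 0:
            continue  # nothing recorded in either tool: nothing to compare
        gap = abs(a - b) / reference
        if gap > tolerance:
            flagged[variation] = round(gap, 3)
    return flagged


ab_counts = {"control": 10_000, "variation": 9_950}
analytics_counts = {"control": 9_900, "variation": 8_400}
print(find_discrepancies(ab_counts, analytics_counts))  # {'variation': 0.156}
```

A variation that shows up in only one of the two tools gets a gap of 1.0, which is exactly the kind of tracking problem you want to catch before reading any results.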

You don’t make sure your sample is representative of your overall traffic

You can’t measure your “true” conversion rate because it’s an ever-moving target: new people come and go every day.

When you do A/B Testing, you take a sample of your overall traffic and expose these selected visitors to your experiment. You then treat the results as representative of your overall traffic, and the measured conversion rate as sufficiently close to what the “true” value would be. This is why your sample should include traffic from all sources (new and returning visitors, social, mobile, email, etc.), mirroring what you currently have in your regular traffic. Unless you’re targeting a specific traffic source or segment with your A/B test, of course. But if that’s not the case, it’s important to make sure that you or your marketing team don’t have any PPC campaigns, newsletters, other campaigns or anything else that could bring unusual traffic to your website during the experiment and skew the results.

People coming to your website through PPC campaigns will generally have a lower conversion rate than regular visitors, whereas returning visitors (or worse, your subscribers) will be much more likely to convert, because they already know you, trust you and maybe love you (I hope you have some who do love you. As Paul Graham, co-founder of Y Combinator, said: “It's better to have 100 people that love you than a million people that just sort of like you”). One other thing to keep in mind: if your competitors are running some kind of big campaign, it could impact your traffic as well. So pay close attention to your sample and make sure it’s not polluted in any way.
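
One way to check that your sample mirrors your regular traffic is to compare the share of each traffic source during the experiment with a baseline period. The Python sketch below does this with plain percentage-point differences. The source names, the visit counts and the 5-point threshold are hypothetical; a formal test such as a chi-square goodness-of-fit test would be a stricter alternative.

```python
def source_shares(visits):
    """Convert raw visit counts per traffic source into shares of the total."""
    total = sum(visits.values())
    return {source: count / total for source, count in visits.items()}


def skewed_sources(baseline, experiment, max_gap_points=5.0):
    """List (source, gap) pairs where the source's share during the experiment
    differs from the baseline by more than `max_gap_points` percentage points.
    The 5-point default is a hypothetical threshold."""
    base = source_shares(baseline)
    test = source_shares(experiment)
    skewed = []
    for source in sorted(set(base) | set(test)):
        gap = (test.get(source, 0.0) - base.get(source, 0.0)) * 100
        if abs(gap) > max_gap_points:
            skewed.append((source, round(gap, 1)))
    return skewed


# Hypothetical visit counts: a PPC campaign launched during the experiment.
baseline = {"organic": 5000, "ppc": 1000, "email": 500, "social": 1500, "direct": 2000}
experiment = {"organic": 4800, "ppc": 3200, "email": 600, "social": 1400, "direct": 2000}
print(skewed_sources(baseline, experiment))  # [('organic', -10.0), ('ppc', 16.7)]
```

Here the PPC share jumps by almost 17 points, which is the kind of pollution that would drag the measured conversion rate away from your usual traffic.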

You run too many tests at the same time

You may be familiar with Sean Ellis’ High Tempo Testing framework. Or maybe you just have the traffic necessary to run several tests at the same time (well done you!).

BUT, by doing that, you’re making the whole process harder. It takes time to gather data, measure, analyze and learn. So if you run 20 tests in parallel, I hope you have an army at your command. Oh, and that all your tests actually increase conversions. Let’s not forget that each test has a chance to DECREASE conversions. Yup. (*shudder*) You might also be messing up your traffic distribution. My what now?

Let’s take an example.

Say you want to test steps 1, 2 and 4 of your checkout process. You send 50% of your traffic to your step 1 control and 50% to its variation. So far so good. Except you’re also testing step 2. That means that unless you re-split the people coming out of both your step 1 control and your step 1 variation evenly (and randomly), so that each version of step 2 gets an equal share of visitors from each, your step 2 experiment will be flawed. And don’t get me started on step 4.
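
A common way to get an even, random re-split at each step is to bucket visitors with a hash of the visitor ID salted with the experiment name, so each experiment’s split is independent of the others. This is a minimal Python sketch of that idea under our own assumptions (the visitor IDs, experiment names and 50/50 split are hypothetical), not a description of how any particular A/B testing tool assigns traffic. It simulates visitors and counts how each step 1 group is split at step 2.

```python
import hashlib
from collections import Counter


def assign(visitor_id, experiment, variations=("control", "variation")):
    """Deterministically bucket a visitor for one experiment.

    Salting the hash with the experiment name makes the assignments for
    different experiments (e.g. step 1 and step 2) independent of each other.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).hexdigest()
    return variations[int(digest, 16) % len(variations)]


def cross_tab(visitor_ids, first="checkout-step-1", second="checkout-step-2"):
    """Count visitors for each (step 1 group, step 2 group) combination."""
    return Counter((assign(v, first), assign(v, second)) for v in visitor_ids)


counts = cross_tab(f"visitor-{i}" for i in range(20_000))
for (step1, step2), n in sorted(counts.items()):
    # Each of the four combinations should hold roughly a quarter of visitors.
    print(f"step 1 {step1:<9} -> step 2 {step2:<9}: {n}")
```

Because the assignment is deterministic, a returning visitor sees the same version as long as their ID is stable. If that ID is stored in a cookie, it stops being stable once the cookie expires or is deleted.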

You absolutely can run multiple tests in parallel, but pay attention to traffic distribution and to the influence tests could have on each other, and make sure you can indeed handle all these tests properly. You’re testing to learn, so don’t test for the sake of testing. Take full advantage of the learnings.

You don’t know about the Flicker effect

The Flicker effect (sounds like a movie title, right?) is when people catch a glimpse of your control while the variation is loading.

It happens when you use a client-side tool, because of the time the browser’s JavaScript engine needs to process the page. It shouldn’t be noticeable to the naked eye (i.e. less than 0.0001 second), though. You don’t want people seeing both versions and wondering what the hell is going on. If it is noticeable, here are possible reasons:

- Your website is slow to load (it’s bad both for UX and SEO by the way)

- Too many scripts load before your A/B Testing tool’s script

- Something in your test conflicts with or disables the tool’s script

- You didn’t put the script in the `<head>` of your page

Address the causes above, or use split URL testing. Just make sure your control is never visible to the human eye. If you want to know more about the Flicker Effect and how to fix it, check out this article.

You run tests for too long

“Don’t stop too early, don’t test for too long”… Give me a break, right? Why is testing for too long a problem? Cookies. No, not the chocolate ones (those are never a problem… mmm, cookies). Cookies are small pieces of data sent by the websites you visit and stored in your browser. They allow websites to track what you’re doing, as well as other navigational information.

A/B Testing tools use cookies to track experiments. Cookies have a validity period (at Kameleoon the duration is customizable, but cookies last 30 days by default). In our case, if you let a test run for more than 30 days with the default validity period, some people will be exposed to your test several times: once their cookies expire, your tool can’t tell whether a visitor was already exposed to the experiment, which pollutes the data. Considering that people sometimes delete cookies on their own too, you’ll always have a degree of pollution in your data. You’ll have to live with that.

But if you let your test run for too long, your cookies will expire. That’s a real problem. You could also get penalized by Google if you run a test longer than they expect a classic experiment to last. So before launching, make sure the validity period of your cookies is consistent with the length of your test. And if you must extend a test because you’re not satisfied with your data (always by at least a full week, remember!), don’t forget about them cookies.
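
The arithmetic behind keeping cookie validity consistent with test length is simple, but it’s easy to forget when you extend a test. The Python sketch below rounds a planned duration up to whole weeks, adds any extension in full weeks, and checks the result against the cookie validity period. The 30-day default mirrors the Kameleoon default mentioned above; the function names and example durations are hypothetical.

```python
import math


def planned_days(min_days, extension_weeks=0):
    """Round the planned duration up to whole weeks, then add any extension.

    Working in full weeks keeps every day of the week equally represented.
    """
    weeks = math.ceil(min_days / 7) + extension_weeks
    return weeks * 7


def cookie_check(duration_days, cookie_validity_days=30):
    """Return a short verdict on whether the cookies outlive the test."""
    if duration_days <= cookie_validity_days:
        return f"OK: {duration_days}-day test fits in {cookie_validity_days}-day cookies"
    return (f"Risk: {duration_days}-day test outlasts {cookie_validity_days}-day cookies; "
            f"raise the validity period to at least {duration_days} days")


print(cookie_check(planned_days(18)))                     # 3 weeks = 21 days
print(cookie_check(planned_days(18, extension_weeks=2)))  # 5 weeks = 35 days
```

The second call shows the trap: a test that comfortably fit its cookies goes over the 30-day default after a two-week extension.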

You don’t take real-world events into account

We already talked about how conversion rates vary from one day of the week to another. That’s actually just one of many factors outside of your control that can influence conversion rates and traffic.

Here are a couple to consider:

- Holidays: Depending on the nature of your business, you could see either a traffic spike or a drop, with people feeling a sense of urgency and converting faster, or the opposite.

- Pay day: Yup, it can be a factor in some cases. I mean, you can probably relate (I know I can): when it’s pay day, you’re way more likely to buy certain types of products and make impulse purchases.

- Major news: A scandal, an assassination, a plane crash… Any of these might very well be impacting your test. People can get scared into buying things out of the blue, or, if the news is important enough, they could be distracted and convert less.

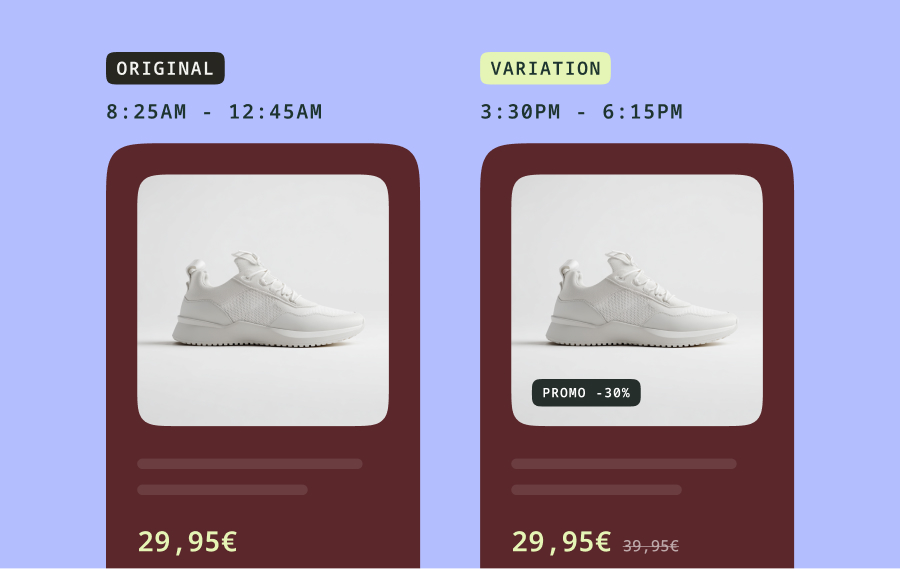

- Time of day: Depending on whether you’re B2B or B2C, people won’t visit and buy at the same times. B2B will usually have more conversions during business hours, B2C outside business hours.

- Weather: First, the obvious one: if a snowstorm is coming and you sell parkas, you’re going to sell more as soon as it’s announced. But that’s not all. Weather influences how we buy. This can be seen throughout history, and in more recent studies like this one by RichRelevance, which found, for example, a 10–12% difference in orders between cloudy and sunny days for their Clothing, Home/Furniture, and Wholesale retailers.

You can minimize these effects by keeping track of the news and looking through your annual data for unusual spikes in traffic, then checking whether they could have been caused by external events.
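
Looking for unusual spikes can be partly automated. Here is a minimal Python sketch that flags days whose traffic is far from typical using the modified z-score (based on the median and the median absolute deviation), a swapped-in robust technique chosen because a single big spike can’t inflate it the way it inflates the mean and standard deviation. The 3.5 cutoff is a common rule of thumb; the day labels and visit counts are hypothetical. A flagged day is only a prompt to check the news, the calendar or the weather, not proof of an external event.

```python
import statistics


def unusual_days(daily_visits, threshold=3.5):
    """Flag days with unusual traffic using the modified z-score.

    daily_visits is a list of (day_label, visits) pairs.
    Modified z-score = 0.6745 * (visits - median) / MAD, where MAD is the
    median absolute deviation from the median.
    """
    values = [visits for _, visits in daily_visits]
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    flagged = []
    for day, visits in daily_visits:
        if mad == 0:
            # Most days are identical: any day that differs stands out.
            is_unusual = visits != median
        else:
            is_unusual = abs(0.6745 * (visits - median) / mad) > threshold
        if is_unusual:
            flagged.append(day)
    return flagged


daily = [("day 1", 1000), ("day 2", 1020), ("day 3", 980), ("day 4", 1010),
         ("day 5", 990), ("day 6", 1005), ("day 7", 995), ("day 8", 2500),
         ("day 9", 1000), ("day 10", 1015)]
print(unusual_days(daily))  # ['day 8']
```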

You don’t check browser/device compatibility

This one is make-or-break. Your test should work across all browsers and devices.

If variations don’t work or display properly on some devices or browsers, you’ll end up with false results (as malfunctions will be counted as losses). Not to mention an awful user experience.

Be especially careful if you use your A/B testing tool’s visual editor to make changes more complex than tweaking colors or copy (we advise against it: code these types of changes yourself). The generated code is usually ugly and could be messing things up.

- Test your code on all browsers and devices. Chrome’s developer tools are usually good enough to simulate most devices.

- Don’t use browser-specific CSS or Ajax.

- Be careful with very recent CSS features (check in your analytics tool which browsers your audience is using).
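
Since a variation that breaks on one browser shows up as “losses”, it helps to break results down by browser (or device) before reading the overall number. This Python sketch compares conversion rates per browser and flags browsers where the variation converts far worse than the control. The data layout, the numbers, the 50% ratio and the 200-visitor minimum are hypothetical; a flag is a reason to go test the variation on that browser, not a verdict.

```python
def suspicious_browsers(results, min_ratio=0.5, min_visitors=200):
    """Flag browsers where the variation converts far worse than the control.

    results maps browser -> {"control": (visitors, conversions),
                             "variation": (visitors, conversions)}.
    A browser is flagged when the variation's conversion rate is below
    `min_ratio` times the control's. Browsers with fewer than `min_visitors`
    in either group are skipped because their rates are too noisy.
    """
    flagged = []
    for browser, groups in sorted(results.items()):
        c_visitors, c_conv = groups["control"]
        v_visitors, v_conv = groups["variation"]
        if min(c_visitors, v_visitors) < min_visitors or c_conv == 0:
            continue
        control_rate = c_conv / c_visitors
        variation_rate = v_conv / v_visitors
        if variation_rate < min_ratio * control_rate:
            flagged.append((browser, round(control_rate, 3), round(variation_rate, 3)))
    return flagged


results = {
    "Chrome": {"control": (5000, 150), "variation": (5000, 160)},
    "Safari": {"control": (2000, 60), "variation": (2000, 8)},
    "Firefox": {"control": (800, 24), "variation": (800, 22)},
    "Opera": {"control": (90, 3), "variation": (85, 0)},
}
print(suspicious_browsers(results))  # [('Safari', 0.03, 0.004)]
```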

That’s all, folks! For today, that is. We'll continue our series on A/B Testing Mistakes next time with an article on ways you could be misinterpreting your A/B test results.

OR, if you REFUSE to wait, and want everything NOW, you can download our Ebook with all the content of our series HERE :)

PS: Before you go, a couple of things I’d like you to do:

- If this article was helpful in any way, please let me know. Either leave a comment, or hit me up on Twitter @kameleoonrocks.

- If you’d like me to cover a topic in particular or have a question, same thing: reach out.

It’s extremely important for me to know that I write content that is both helpful and focused on what matters to you. I know it sounds cheesy and fake, but I feel like writing purely marketing, “empty” stuff just for the exercise is a huge waste of time for everyone. Useless for you, and extremely unenjoyable for me to write. So let’s work together!

PS2: If you missed the first two articles in our series, here they are: