How can I use AI & machine learning for better Research, CRO, A/B testing, and Automation?

This interview is part of Kameleoon's Expert FAQs series, where we interview leaders in data-driven CX optimization and experimentation. Craig Sullivan runs his own consultancy firm, “Optimise Or Die,” and is the winner of a “Legend of Experimentation” award as well as a “Legend of Analytics” award from Measurecamp, both for contributions to the data and testing community. Craig started out in UX, embraced analytics data, and then combined these disciplines with A/B testing in 2004. For the last 10 years, he's been helping companies scale and optimize their experimentation efforts to free the latent value inside their products, helping teams increase value and learning from testing.

How could I use AI and Machine Learning (ML) tools to identify opportunities throughout the customer journey?

There are a lot of examples out there to showcase the use of AI and ML. They are embedded in a heap of products, and while the world has mainly concentrated on AI vendors, the big movement has been to make it easier to build AI integrations for products.

The DevOps tools are now there to glue data, processes, workflows, tools, and Large Language Models (LLM) together in production—along with input streams of customer data, text feedback, chat window interactions, etc. AI is becoming more distributed and integrated with products and toolkits you already know and use. Your Session Replay vendor is probably already using machine learning and AI, your Analytics Tools (like GA4) are already using it, and you should expect to see more integrations or tool-specific AI features appearing in a range of qualitative, quantitative, and discovery tools.

Session replay, analytics data, predictive analytics, personalization, chatbots, customer support, text and feedback summarization, CRO, SEO, competitor analysis, review mining, copy research, and automating the coding or analysis of A/B tests are all areas that will be accelerated with AI. The question might eventually be, “Where are we NOT using ML and AI?”

To what extent can AI & machine learning help me find conversion problems and work out the underlying causes?

There are lots of areas where AI & machine learning tools can help, such as:

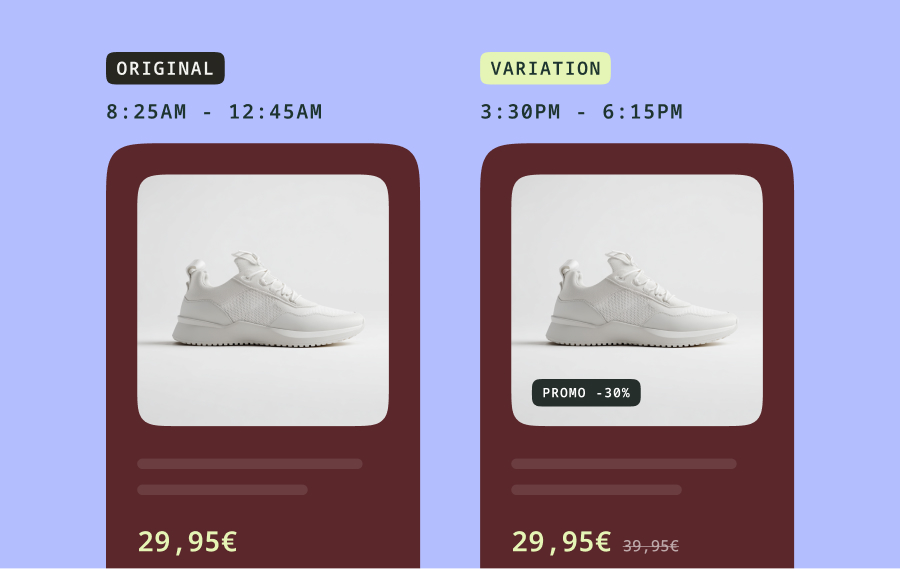

- Predictive analytics and propensity scoring (e.g., Kameleoon Conversion Score).

- Anomaly or error detection.

- Performance optimization.

- Finding problems in products automatically through data, session replay, and interaction tracking.

- Basic auditing or checking of websites, journeys, and flows.

When it comes to AI-automated checks on fields, forms, interactions, devices, screens, and copy, the technology is not smart, but it can do some of the dull work involved in device and accessibility audits or in testing a flow, journey, or A/B test setup.

In terms of identifying bugs, defects, or UX problems, session replay tools are getting much better at mining valuable insights. My hunch is that searching and filtering will also become much easier once these tools add contextual interpretation to search.

Multimodal AI offers the possibility for a single LLM to handle a mass of inputs like videos, images, text, and other data. This enables AI products to parse, browse, and understand websites or watch session replay videos of people using your checkout and find problems.

If customers are providing product reviews, you can mine them using AI. You can understand and cluster common problems, quantify them, and prioritize them. Creating a problem statement and hypothesis around the most important issues you discover and then ranking them will help you make experiments that solve real issues you've identified using AI.
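The cluster, quantify, and prioritize steps above can be sketched in a few lines of Python. This is a minimal illustration, not the approach Craig uses: the `THEMES` keyword map, the function names, and the sample reviews are all hypothetical, and simple keyword matching stands in for the grouping that an LLM or embedding model would do in practice.

```python
from collections import Counter

# Hypothetical themes and trigger keywords. In a real workflow an LLM or an
# embedding-based clustering step would propose these groups from the data.
THEMES = {
    "delivery": ["late", "delivery", "shipping"],
    "checkout": ["checkout", "payment", "card"],
    "quality": ["broken", "scratched", "flimsy"],
}


def tag_review(text: str) -> set[str]:
    """Return every theme whose keywords appear in the review text."""
    lowered = text.lower()
    return {
        theme
        for theme, words in THEMES.items()
        if any(word in lowered for word in words)
    }


def rank_problems(reviews: list[str]) -> list[tuple[str, int]]:
    """Count reviews per theme and sort the most common problems first."""
    counts: Counter[str] = Counter()
    for review in reviews:
        counts.update(tag_review(review))
    return counts.most_common()


# Made-up sample reviews, for illustration only.
reviews = [
    "Delivery was two weeks late.",
    "My card was declined at checkout twice.",
    "Arrived late and the table leg was broken.",
    "Shipping took forever.",
]

ranked = rank_problems(reviews)
print(ranked)
```

The ranked counts give you a rough, quantified starting point for writing problem statements. Deciding which of those problems deserves an experiment is still your call.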

How can AI and machine learning help me set up and run qualitative research to inform hypotheses?

I don't think AI is at the stage yet where it can help with anything but the boring parts of qualitative research. It certainly can’t replace the need for customers as our source of truth. In my opinion, AI cannot (yet) set up and run qualitative research. You could, for example, use AI to coordinate and consolidate a range of customer “bot” interviews that you design, but this is very different from AI setting up and running the research.

With some understandable limitations, AI can still be very useful for general research and discovery work. Think of it as an enthusiastic but inexperienced intern. It may have good ideas or remind you of important things, but it's not making the decisions; you are.

—Craig Sullivan

CEO of Optimise or Die

Iqbal Ali and I have been using AI to summarize and mine insights from reviews, chats, and competitor websites. This is an interesting piece of research in itself. Not only can we figure out the exact problems (or groups of problems) that people have with a product, but we can also ask interactive and iterative questions directly about that dataset. We can compare the problem and solution space across a range of competitors. We can then understand the important issues by getting AI to summarize them. This clustering usually surfaces customer problems we had no idea existed or that give us transformational ideas for experiments—things that will fundamentally change the product or business.

AI can help you handle lots of varied types of feedback that you’d never have time to read, turning it into useful and quantifiable insights within seconds. In terms of discovery and research work, AI can help with summarizing videos, conversations, text inputs, copy research, competitive evaluations, and more. Here’s a resource pack of further reading with great examples, prompts, and approaches.

In what ways could teams use AI & machine learning tools to help prioritize which hypotheses to test first?

I've seen several powerful ways that AI can help teams with prioritization, prediction, and parsing:

- Prioritization: I think that AI can really help automate and score the prioritization of test ideas. Whatever scheme you use, AI can collect the data required to help rank an experiment backlog. You can also score tests around how well they solve particular customer problems that you’ve identified through mining feedback text.

- Prediction: This is where you use a repository of A/B test results to develop a “prediction score,” or “likelihood to have a significant and positive result,” based on the metadata stored about the experiments. I know that vendors and some larger companies are now collecting A/B testing metadata to train models that predict future test success. My hunch is that the quality of the scoring will depend heavily on the quality of the test metadata, some of which will have to be automatically generated. Multimodal AI can start to understand what tests are doing.

- Parsing: You can use AI to do the analysis work and identify opportunities. This article has slides and video showing how you can use GA4 with AI to optimize content (SEO). The video shows you how AI isn't making the decisions; it's just helping you do this work faster and smarter. GA4 provides the data, but you can power some smart analysis and opportunity identification using AI on top.
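As a rough illustration of the prioritization idea above, here is a minimal Python sketch that ranks a backlog. Everything in it is an assumption made for the example: the `Idea` fields, the impact × confidence ÷ effort formula, the evidence boost based on feedback mentions, and the sample numbers. Swap in whatever scoring scheme your team already uses.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int            # expected upside, 1-10 (hypothetical scale)
    confidence: int        # strength of supporting evidence, 1-10
    effort: int            # cost to build and run, 1-10
    problem_mentions: int  # feedback items mentioning the problem it solves


def score(idea: Idea, total_mentions: int) -> float:
    """Base score scaled up by the share of feedback the idea addresses."""
    base = idea.impact * idea.confidence / idea.effort
    evidence = 1 + (idea.problem_mentions / total_mentions if total_mentions else 0)
    return base * evidence


def rank_backlog(backlog: list[Idea]) -> list[tuple[str, float]]:
    """Return (name, score) pairs, highest score first."""
    total = sum(idea.problem_mentions for idea in backlog)
    scored = [(idea.name, round(score(idea, total), 2)) for idea in backlog]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up backlog, for illustration only.
backlog = [
    Idea("Redesign homepage hero", 6, 4, 8, 0),
    Idea("Show delivery dates on product page", 8, 7, 4, 30),
    Idea("Add wallet payments at checkout", 7, 6, 6, 20),
]

ranking = rank_backlog(backlog)
for name, value in ranking:
    print(f"{value:>6}  {name}")
```

In this sketch the AI's job would be filling in the inputs, for example counting `problem_mentions` from mined feedback. The formula itself stays simple and transparent so the team can challenge the ranking.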

There are endless permutations when it comes to designing variants for each hypothesis. How can AI & machine learning help create the best test variant?

If you have that many variants, you may have too many ideas and no clear hypothesis. In this case, you should use this Hypothesis Kit to frame your hypothesis.

Alternatively, you’ll need a multi-armed bandit algorithm to test several competing ideas where you have no clear preference.
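For readers unfamiliar with bandits, here is a minimal simulation sketch of one common approach, Thompson sampling with a Beta prior on each variant's conversion rate. It is an assumption that this is the kind of algorithm meant here. The function name and the conversion rates are invented for the simulation, and a testing platform's own bandit will differ in detail.

```python
import random


def thompson_sampling(true_rates: list[float], rounds: int, seed: int = 0) -> list[int]:
    """Simulate a Beta-Bernoulli bandit and return how often each arm was shown.

    true_rates are the (normally unknown) conversion rates of each variant;
    they are only used here to simulate visitor behaviour.
    """
    rng = random.Random(seed)
    n_arms = len(true_rates)
    wins = [0] * n_arms    # conversions observed per variant
    losses = [0] * n_arms  # non-conversions observed per variant

    for _ in range(rounds):
        # Draw a plausible conversion rate for each variant from its posterior.
        samples = [
            rng.betavariate(wins[i] + 1, losses[i] + 1) for i in range(n_arms)
        ]
        # Show the variant whose sampled rate is highest.
        arm = max(range(n_arms), key=samples.__getitem__)
        # Simulate whether this visitor converts, then update the counts.
        if rng.random() < true_rates[arm]:
            wins[arm] += 1
        else:
            losses[arm] += 1

    return [wins[i] + losses[i] for i in range(n_arms)]


# Hypothetical conversion rates for three competing ideas.
pulls = thompson_sampling([0.05, 0.10, 0.30], rounds=5000)
print(pulls)
```

Traffic shifts toward the stronger variant as evidence builds, which is why a bandit suits the case where you have several ideas and no clear preference. The trade-off is that you learn less about the weaker variants than a fixed-split A/B test would tell you.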

I think that the most important thinking around experimentation happens at the stage where you are writing and defining your hypothesis and how you’ll design the experiment—working out what metrics you will use, what type of test you will run, and what you expect to learn from running the test. This design activity requires that the inputs (the data, research, insights, range of inspection, and discovery methods you have) are all good quality. Otherwise, it’s garbage in, garbage out.

The best way to narrow down a large number of test possibilities is to concentrate on identifying, understanding, and then focusing on solving customer or business problems—the stuff that drives value.

AI can also be used in test variants. Let’s consider an example of a business problem for a furniture store. Product artwork is expensive because it requires photographers to take photos of the furniture in a mocked-up room. So, you run an A/B test that removes this “lifestyle” imagery from product pages to measure the impact. You find it harms conversion, but now you can weigh up the cost vs. benefit argument for photography. After discussing the “value” the photos provide, your team runs two follow-up tests: one using imagery ONLY on the top 250 products, and a second using AI-generated product artwork instead of photos. After the second test, you will have the information to make an informed decision.

Alongside your “Legend of Experimentation” title, you’re famous for your after-conference DJ sets. Can you tell us what disco edits are and share some of your favorites?

DJ edits and remixes of music tracks have been around for ages, but the Disco Edits movement is specifically about taking classic old disco records and tweaking them into bangers that are great to dance to. I’ve learned that old-school stuff mixed with newer beats provides recognizable hooks for everyone. Only some people know the originals, but everybody enjoys dancing to the new mix. If you’re looking for a history of Disco Edits, give this a read.

Here’s my own disco edits playlist, along with a list of my favorites:

- You make me feel (mighty real): refunked and remixed.

- A Love Like Mine: an old seventies gem, housed up.

- Rasputin: modern remix by Majestic.

- Can’t take my eyes off you.

- Paroles Paroles: a lovely French mix.

- A fifth of Beethoven: Funky piano rework.