6 Practical tips for getting started with mobile app testing

While you may have mastered the art of A/B testing on web experiences, mobile app testing presents new challenges. Screen size limitations, user behavior nuances, and platform-specific conditions demand a distinct approach. This article bridges the gap, leveraging your existing A/B testing expertise to unlock the secrets of mobile app testing. We'll delve into the key differences between web and mobile app testing and equip you with practical tips from seven mobile experimentation leaders.

But before we dive into the ‘how,’ it’s good to understand why mobile app testing is useful.

Why is mobile app testing useful?

Jorden Lentze, Senior Product Manager Apps at Booking.com, succinctly summarizes four core reasons to embrace mobile A/B testing:

- Follow your customers: If your mobile app has a large number of users (or high-value customers), you should dedicate time and resources to optimizing that experience.

- Risk mitigation: Making changes to mobile apps takes more time and resources than web experiences. Mobile app tests help you evaluate changes to ensure they will have a positive impact before they are fully implemented.

- Loss prevention: Identify any negative impacts from changes and avoid releasing bugs or bad UX into the app experience.

- Customer centricity: Continuously improve the experience and add value for your customers.

The nuances of mobile app testing

While most of the core principles of A/B testing are consistent across web and mobile, the technical constraints of mobile apps necessitate different testing methods. For web-based experiences, tests can be deployed instantly. But on mobile, you're beholden to your development teams’ app release cycle, the app store approval process, and users updating their apps.

Furthermore, website experimentation platforms typically include WYSIWYG editors and client-side technologies that empower non-technical teams to craft tests without developer support. But mobile app tests demand closer collaboration and, depending on your requirements, dedicated iOS and Android developers.

It’s also important to remember that your users might move between your app and website. To maintain a cohesive customer journey, align closely with other teams and consider designing tests that run across devices.

While deploying mobile app tests might be more challenging than web tests, mobile apps typically boast more logged-in users. This translates to a more holistic view of user interactions and behavior across platforms, providing a richer tapestry of data and more reliable test insights.

The other plus for mobile app testing is that while you might not have high traffic, apps generally boast higher engagement and conversion rates than websites, meaning it’s possible to achieve statistically significant results with less traffic.
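To make the traffic point concrete, here is a rough sketch using the standard two-proportion sample size approximation. The baseline rates (2% for web, 8% for app) and the 10% relative lift are made-up illustrative numbers, not figures from any of the contributors, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed per variant to detect a relative lift.

    Uses the common normal-approximation formula for comparing two
    proportions with a two-sided test.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed, illustrative conversion rates: 2% on web vs. 8% in the app
web_n = sample_size_per_variant(baseline_rate=0.02, relative_lift=0.10)
app_n = sample_size_per_variant(baseline_rate=0.08, relative_lift=0.10)
print(f"web: {web_n} users per variant, app: {app_n} users per variant")
```

Under these assumptions, the higher-converting app experience needs a considerably smaller sample to detect the same relative lift. The exact numbers depend on the significance level and power you choose.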

Using feature flags to get started with mobile app testing

Rather than using A/B testing right away, you should consider using feature flags for mobile app tests.

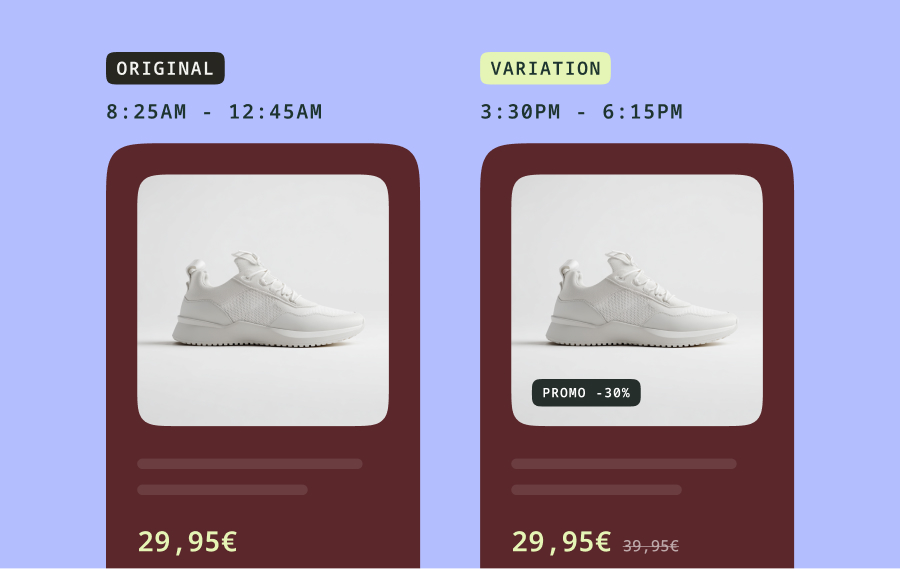

Start by deploying a change to a small set of users via feature flags. If the change behind the feature flag doesn't meet expectations, you can turn it off instantly, giving you a kill switch that isn’t dependent on the app release cycle.

If you see positive results, you can progressively roll out the change to more users while measuring the impact on your key metrics and performance. You'll be able to show the value of this approach to your managers and then make the final step towards running the change as a full A/B test.
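As a minimal sketch of how a kill switch and a progressive rollout can work together, the example below hashes each user into a stable bucket from 0 to 99 and compares it with a rollout percentage. The flag structure, the `new_checkout` key, and the function names are hypothetical; a real feature flagging tool would normally handle this and serve the configuration remotely.

```python
import hashlib


def rollout_bucket(flag_key: str, user_id: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given flag."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def is_enabled(flag: dict, user_id: str) -> bool:
    """Return True if the flagged change should be shown to this user."""
    if not flag["enabled"]:  # kill switch: off for everyone, no app release needed
        return False
    return rollout_bucket(flag["key"], user_id) < flag["rollout_percent"]


# Hypothetical flag configuration (assumed to come from a remote flag service)
flag = {"key": "new_checkout", "enabled": True, "rollout_percent": 5}
users = [f"user-{i}" for i in range(1000)]

exposed_at_5 = {u for u in users if is_enabled(flag, u)}

flag["rollout_percent"] = 25  # results look good: widen the rollout
exposed_at_25 = {u for u in users if is_enabled(flag, u)}

flag["enabled"] = False       # something went wrong: flip the kill switch
exposed_after_kill = {u for u in users if is_enabled(flag, u)}
```

Because the bucket depends only on the flag key and the user ID, everyone who saw the change at 5% still sees it at 25%, so widening the rollout never flips an exposed user back to the old experience.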

It’s worth remembering here that not all ideas need testing. A/B tests should inform decisions such as adding a feature or changing an experience but aren’t required for bug fixes or UX improvements generally.

To achieve the feature flag > progressive rollout > experimentation workflow, you’ll need an intuitive and easy-to-use testing tool that supports feature flags. Some tools will streamline the implementation of experiments better than others, with no additional development required, making it easy for non-technical users to self-serve. Ideally, rather than asking your developers to code an experiment, you’ll ask them to code the feature; then, the Product Manager can create the experiment using feature flags and test variants of that feature.

Six practical tips for mobile app testing

In addition to the different technical approaches and methods used to conduct mobile app tests, there are also process-related tips that are valuable to know. Here are six of the best pieces of advice from seven industry leaders working in mobile app testing.

1. Start small and avoid the snowflake effect

No matter how mature your website-based experimentation program is, it’s wise to start your mobile app testing journey at a beginner level and then ramp things up toward more complex experiments. As Hannah suggests,

Choose one specific element to test at a time —whether it’s a headline, button color, or feature placement. This definition ensures you can directly attribute any changes in user behavior to that specific variation.

—Hannah Parvaz

Founder at Aperture

William also recommends a lean mindset when it comes to creating tests. Perfection is the enemy of experimentation, as he explains,

You should take a lean approach and start with a Minimum Viable Test (MVT) when experimenting. This means you shouldn’t overthink your A/B test: the sooner you get your test out, the sooner data will come in, which will help you iterate on the test. Moreover, PMs and marketers often fall into what I call the Snowflake effect, where they assume their users will be upset and bounce if the experience is not perfect. In the hundreds of tests I’ve run, only once or twice did this happen.

—William Klein

Product Growth at WeWard

But what does a simple Minimum Viable Test (MVT) look like in reality? Jorden Lentze, Senior Product Manager Apps at Booking.com, suggests you could start by removing something from your experience rather than creating something new. To achieve this, you would need to put the element in question behind a feature flag and then deactivate it for a group of users. This makes it easy to get your first mobile app experiment into production.

2. Track the right metrics

The whole point of experimentation is to gather data to inform decisions, so you have to ensure you’re tracking the right metrics and have access to the necessary data before you start your first mobile app test. It’s also crucial to define what success looks like before you begin to avoid biased analysis; as Hannah explains,

It’s crucial to define your success metrics beforehand, whether that’s an increase in app engagement, higher conversion rates, or another relevant metric. This way, you can objectively assess the impact of your test.

—Hannah Parvaz

Founder at Aperture

Umashankar expands on this,

Focus on defining clear, measurable objectives for your A/B test and ensure they align with your app's core user experience and business goals. Prioritize simplicity in your test design to isolate variables effectively, making it easier to interpret results.

—Umashankar Shivanand

Head of Product at SleepScore Labs

But should your change be a test at all? To know whether your test design is fit for purpose, ask yourself the following,

How will you measure success, and what will the hypothetical results mean? If you cannot determine what to do with the results, your test needs reviewing. Try to resist peeking at the results before they reach significance to avoid drawing false conclusions.

—Léa Samrani

Product & Growth Consultant
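One simple way to resist peeking is to fix the sample size up front and only evaluate the result once every variant has reached it. The sketch below assumes a standard pooled two-proportion z-test; the function names, the planned sample size, and the conversion counts are illustrative, not taken from the contributors.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def evaluate(conv_a, n_a, conv_b, n_b, planned_n, alpha=0.05):
    """Only judge the test once both variants reach the planned sample size."""
    if min(n_a, n_b) < planned_n:
        return "keep running"  # too early: no conclusion is drawn yet
    p_value = two_proportion_p_value(conv_a, n_a, conv_b, n_b)
    return "significant" if p_value < alpha else "not significant"


# Illustrative counts only
early_look = evaluate(80, 1000, 100, 1000, planned_n=5000)
final_look = evaluate(400, 5000, 500, 5000, planned_n=5000)
print(early_look, "/", final_look)
```

Many experimentation tools offer sequential methods that allow safe interim looks, so treat this fixed-horizon check as one option rather than the only valid approach.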

Using an experimentation tool that enables you to get a single unified view of the user can help a lot when assessing the impact of an experiment and enable you to use historical data for targeting purposes. This is useful in scenarios such as the one Léa mentions:

Always test on new users when you can. Existing users will need time to adjust to a new experience, which will impact the duration of your testing.

—Léa Samrani

Product & Growth Consultant

To get this unified view, you must use a testing tool that consistently buckets users. It ensures that users receive a uniform experience across sessions and devices. If you have logged-in users, you should also ensure that the feature flagging platform can show them the same variant, whatever devices they use.
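Consistent bucketing is usually achieved by hashing a stable identifier rather than assigning variants at random on each session. The following is a minimal sketch under that assumption: it prefers a logged-in account ID and falls back to the device ID. The function names and identifiers are hypothetical and do not describe any particular platform's implementation.

```python
import hashlib
from typing import Optional


def stable_unit_id(account_id: Optional[str], device_id: str) -> str:
    """Prefer the logged-in account so a user matches across devices."""
    return f"account:{account_id}" if account_id else f"device:{device_id}"


def assign_variant(experiment_key: str, unit_id: str,
                   variants=("control", "treatment")) -> str:
    """Deterministically map an identifier to one of the variants."""
    digest = hashlib.sha256(f"{experiment_key}:{unit_id}".encode("utf-8")).hexdigest()
    return variants[int(digest[:8], 16) % len(variants)]


# The same logged-in user on two different devices (hypothetical IDs)
on_phone = assign_variant("paywall_test", stable_unit_id("acct-42", "ios-device-1"))
on_tablet = assign_variant("paywall_test", stable_unit_id("acct-42", "android-device-9"))

# An anonymous user is bucketed by device ID instead
anonymous = assign_variant("paywall_test", stable_unit_id(None, "ios-device-1"))
```

Including the experiment key in the hash means a user's assignment in one experiment does not determine their assignment in another.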

At Booking.com, mobile app tests don’t rely on cookies. Instead, app users are measured by their device ID, which gives more reliable data. This unified user view helps you understand the real impact of your tests:

You need to understand the impact of your test across the funnel. To do this, make sure you've identified not only the primary metric but also your secondary/health metrics. For example, if you're trying to impact Average Revenue per User via a paywall test, you could also look at D1 user retention (the number of unique users who come back to your app the day after installing/opening it).

—Sylvain Gauchet

Director of Revenue Strategy, US at Babbel
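As an illustration of a health metric such as D1 retention, the sketch below computes it as the share of a cohort that is active exactly one day after first opening the app. Expressing it as a rate rather than a raw count, along with the data layout and user IDs, is an assumption made for this example.

```python
from datetime import date, timedelta


def d1_retention(first_open: dict, activity: dict) -> float:
    """Share of users who were active the day after their first open."""
    if not first_open:
        return 0.0
    returned = sum(
        1
        for user, opened_on in first_open.items()
        if opened_on + timedelta(days=1) in activity.get(user, set())
    )
    return returned / len(first_open)


# Hypothetical cohort: first-open date per user, and the days each was active afterwards
first_open = {
    "a": date(2024, 1, 1),
    "b": date(2024, 1, 1),
    "c": date(2024, 1, 2),
    "d": date(2024, 1, 2),
}
activity = {
    "a": {date(2024, 1, 2)},                    # came back on day 1
    "b": {date(2024, 1, 3)},                    # came back, but only on day 2
    "c": {date(2024, 1, 3), date(2024, 1, 4)},  # came back on day 1
    "d": set(),                                 # never came back
}

rate = d1_retention(first_open, activity)
```

Computing this per variant alongside the primary metric shows whether a revenue gain from something like a paywall test comes at the cost of users not returning.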

3. Collect qualitative and quantitative data within your testing process

A/B and analytics data are great but do not represent the whole picture. Often, quantitative data fails to uncover the why behind user behavior. Umashankar recommends the following,

Engage with users directly to incorporate their feedback into your A/B testing cycle. Set up mechanisms to gather qualitative feedback, such as surveys or user interviews, alongside your quantitative A/B test data. This dual approach allows you to understand not just the 'what' behind user behaviors but also the 'why,' ensuring that your optimizations are deeply informed by user needs and preferences.

—Umashankar Shivanand

Head of Product at SleepScore Labs

Experiments, whether for mobile or web, that are based on signals across multiple data sources help reduce bias and enhance the validity of your hypothesis. So, collecting this type of qualitative data is worth the extra effort.

4. Prioritize mobile app tests that matter to your customers

Just as you would for web experimentation, you must prioritize what tests you’ll run. Inae provides a valuable way of identifying what tests are most important.

To help you define what customers want, examine your product-market fit findings and use this to prioritize the most important features to test with a hypothesis. Otherwise, you will end up running short-sighted tests such as button color tests. Be ready to adapt and try again based on what you learn, as you won't always get the result you were hoping for.

—Inae Lee

Growth Product Manager at Capital One

In addition to this, you can also use the same prioritization frameworks used for web-based experiments, such as PXL, PIE, or ICE. Once tests are proposed across experiences, you can add them to your A/B testing roadmap to help coordinate efforts and ensure there are no conflicting experiments.
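As a small illustration of how a framework like ICE can turn a backlog into a ranked roadmap, the sketch below scores each idea on Impact, Confidence, and Ease from 1 to 10 and multiplies the three. Teams differ on whether to multiply or average the scores, and the ideas and numbers here are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    impact: int      # expected effect on the key metric, 1-10
    confidence: int  # how sure you are about that effect, 1-10
    ease: int        # how cheap it is to build and ship, 1-10

    @property
    def ice_score(self) -> int:
        # One common convention; some teams average the three scores instead
        return self.impact * self.confidence * self.ease


# Hypothetical backlog with made-up scores
backlog = [
    ExperimentIdea("Change button color", impact=2, confidence=3, ease=9),
    ExperimentIdea("Simplify paywall copy", impact=8, confidence=6, ease=7),
    ExperimentIdea("Redesign onboarding", impact=9, confidence=5, ease=3),
]

ranked = sorted(backlog, key=lambda idea: idea.ice_score, reverse=True)
for idea in ranked:
    print(f"{idea.ice_score:>4}  {idea.name}")
```

The scores are subjective, so the ranking is best treated as a starting point for discussion rather than a final ordering.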

5. Remember not all mobile experiences are created equal

The key to mobile app testing is to recognize the unique aspects of each platform. When you see differences in test results between iOS and Android, they could be due to different audiences/behavior, but more often than not, there’s a more straightforward explanation:

When I see differences in test results across platforms, it's often an implementation issue. Something is a little bit different in the implementation, and this is the reason why you're not seeing the same effect. Or it could be design differences—there's an inherent design difference between iOS and Android that might change the behavior.

—Jorden Lentze

Senior Product Manager Apps at Booking.com

Check for differences in how the test was implemented on each platform first, before attributing a discrepancy to slight audience differences between platforms.

6. Parity across mobile apps isn’t the goal

Many businesses, if they have native mobile apps, will create iOS and Android versions that are effectively standalone experiences. The innate feeling is that these apps should mirror each other so all users get the same experience, but Jorden has some advice on this,

Unless it's price or a key feature, parity is not the goal. The goal is to create an experience where every part of the experience adds value. Interestingly, we had room filters on Android but couldn't get them to work on iOS. We've been trying for a long time to get parity. But if it's not adding value, why would you implement it? It can be important if it's part of your brand design or if it's a specific feature that is cross-platform, but I think it's always good to make sure that you're actually adding value. Otherwise, you have maintenance costs, and you're adding friction for customers.

—Jorden Lentze

Senior Product Manager Apps at Booking.com

6 Practical tips for getting started with mobile app testing

- Start small: Begin with simple A/B tests, focusing on one element at a time. This helps isolate the impact of changes.

- Track the right metrics: Define clear success metrics upfront to objectively assess your tests' results.

- Collect qualitative and quantitative data: Supplement A/B test data with user feedback to understand the "why" behind user behavior and ensure user-centric optimizations.

- Prioritize mobile app tests based on customer needs: Focus on testing elements most valuable to your customers by prioritizing features aligned with user needs.

- Remember not all mobile experiences are created equal: While iOS and Android users might behave differently, most often, differences in results between the two are due to implementation discrepancies.

- Parity across mobile apps isn't the goal: Don't chase parity across platforms; prioritize value creation over identical user experiences.

Thanks to all of the contributors:

- Sylvain Gauchet, Director of Revenue Strategy, US at Babbel

- Umashankar Shivanand, Head of Product at SleepScore Labs

- Inae Lee, Growth Product Manager at Capital One

- Léa Samrani, Product & Growth Consultant

- William Klein, Product Growth at WeWard

- Hannah Parvaz, Founder at Aperture

- Jorden Lentze, Senior Product Manager Apps at Booking.com

If you'd like to learn more about mobile app testing, check out this webinar recording, in which Jorden Lentze, Senior Product Manager, shares real-world examples of his work from Booking.com.