How can I validate future engineering work with experimentation?

This interview is part of Kameleoon's Expert FAQs series, where we interview leaders in data-driven CX optimization and experimentation. Eddie Aguilar is the founder of Blazing Growth, where he architects intuitive experiences using data, privacy, and technology to improve business, revenue, and retention.

Eddie has been building and leading engineering experimentation programs for 12 years and has been a programmer for over 15 years. He has worked with companies like the Bill & Melinda Gates Foundation, Google, Petco, Seagate, Box, HPE, VMware, Pokémon, NFL, Ellevest, ExtraHop, and more.

How can I use experiment data to prioritize the engineering backlog?

Several factors affect the priority of the engineering backlog: time, money, resources, KPIs, engineering processes, and competitor activity.

These factors don’t always balance out or point you in a single direction, so how do you determine what’s top priority? Experimentation gives you the data to decide: use customer requests from sales or support channels to prioritize what gets tested.

Once these are validated through experimentation, they should move to the front of the engineering queue. Items not validated by experimentation can be user-tested via tools like UXTesting or WEVO, thrown out, or revisited later.

After years of working between engineering, product, and marketing teams, I realized that existing prioritization models don’t help me meet business needs; instead, they prioritize website elements or website fixes.

Instead, I now consider three critical variables to help prioritize engineering work: Data, Ideas, and Decisions (D.I.D).

Here is what goes into each component of D.I.D.:

- Data: What data do you have from experiments or user research? Include data from all parts of your business, like sales, support, and AI assistants. We want to ensure every task or hypothesis is backed by as much data as possible. Consider the strength of your "priors" and evaluate how strong the data is.

- Ideas: How feasible is the idea or hypothesis you’re testing? What’s the amount of engineering and marketing effort needed to support the hypothesis? Evaluate your current tech stack, or plans for future tooling, to ensure they can support your experiment. Check if the necessary KPIs exist and if your user behavior analysis tool can capture the experiment. Then determine if you need legal, engineering, or branding resources to execute the test.

- Decisions: What decisions do you need to make after your experiment is completed? So many times, I’ve seen companies run experiments and do nothing with them afterward. Ask yourself: is this an actual feature you want to implement, or is it more of an insightful takeaway for future development?

With the above information, you can then determine what work needs to be built and shipped as a priority and what needs more data before you decide. In this sense, D.I.D. tells you whether a test met requirements, was backed by enough contextual data, and had engineers assigned. But, most importantly, this framework helps you understand whether a test was a waste of time.
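One way to make D.I.D. concrete is a yes/no checklist per backlog item. The sketch below is a hypothetical illustration, not the author's model: the `BacklogItem` fields, the `triage` function, the example items, and the order of the checks are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    # Hypothetical fields, one per D.I.D. component.
    name: str
    has_supporting_data: bool  # Data: backed by experiments, research, sales or support data
    is_feasible: bool          # Ideas: stack, KPIs, and people can support the test
    has_decision_plan: bool    # Decisions: you know what you will do with the result


def triage(item: BacklogItem) -> str:
    """Assumed check order: with no decision planned, the test is likely a waste of time."""
    if not item.has_decision_plan:
        return "skip"
    if not item.is_feasible:
        return "revisit later"
    if not item.has_supporting_data:
        return "needs more data"
    return "prioritize"


# Hypothetical backlog items.
backlog = [
    BacklogItem("Get Demo Now CTA", True, True, True),
    BacklogItem("Homepage redesign", False, True, True),
    BacklogItem("Chatbot widget", True, True, False),
]

for item in backlog:
    print(f"{item.name}: {triage(item)}")
```

A fuller version might replace the booleans with scores, for example rating how strong your priors are, while keeping the same idea of checking all three components before work is prioritized.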

There’s a book called Algorithms to Live By which describes the "Shortest Processing Time" algorithm: always complete the quickest tasks first. When tasks differ in importance, the weighted version of the rule divides the importance of each task by how long it will take and works on the highest ratio first. In other words, only prioritize a task that takes twice as long if it is twice as important. This ties into ‘cost of delay’-type calculations.
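The weighted rule fits in a few lines of Python. This is a minimal sketch: the task names, importance scores, and hour estimates are hypothetical, and "importance" is whatever relative weight your team agrees on.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    importance: float  # hypothetical relative weight, e.g. expected business impact
    hours: float       # estimated time to complete


def weighted_spt(tasks: list[Task]) -> list[Task]:
    """Order tasks by importance per hour, highest first (weighted Shortest Processing Time)."""
    return sorted(tasks, key=lambda t: t.importance / t.hours, reverse=True)


backlog = [
    Task("checkout bug fix", importance=8, hours=4),    # 2.0 importance per hour
    Task("pricing page test", importance=6, hours=2),   # 3.0 importance per hour
    Task("backend refresh", importance=20, hours=40),   # 0.5 importance per hour
]

for task in weighted_spt(backlog):
    print(f"{task.name}: {task.importance / task.hours:.1f} importance/hour")
```

The backend refresh is the most important item in absolute terms, but it lands last because its importance does not grow as fast as its time cost.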

Then there’s the Copernican Principle, which lets you predict how long something will last without knowing much of anything about it. Its prediction is that something will go on about as long as it has gone on so far. You can apply this principle to questions like estimating customer lifetime value, or how long someone will remain a customer.
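Here is a minimal sketch of the Copernican estimate, assuming you observe a customer at a random point in their lifetime. Under that assumption the best single guess is that they are halfway through, so the remaining time equals the time elapsed; the same reasoning gives a 50% range of one third to three times the elapsed time. The `copernican_estimate` name and the 18-month tenure are illustrative.

```python
def copernican_estimate(elapsed: float) -> tuple[float, float, float]:
    """Estimate remaining lifetime from time elapsed so far (Copernican Principle).

    Assumes the observation moment is uniformly random within the total lifetime,
    so the elapsed fraction f is uniform on (0, 1) and remaining = elapsed * (1 - f) / f.
    - Median guess: f = 1/2, so remaining equals elapsed.
    - 50% interval: f between 1/4 and 3/4, so remaining is between elapsed / 3 and 3 * elapsed.
    """
    best_guess = elapsed
    low = elapsed / 3
    high = elapsed * 3
    return best_guess, low, high


# Hypothetical customer who has been subscribed for 18 months.
best, low, high = copernican_estimate(18)
print(f"Expected to stay about {best} more months (50% range: {low:.0f} to {high:.0f})")
```

The estimate ignores everything you know about the customer, which is its strength when you have no other data and its weakness when you do.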

Why should I bother validating engineering work via experiments?

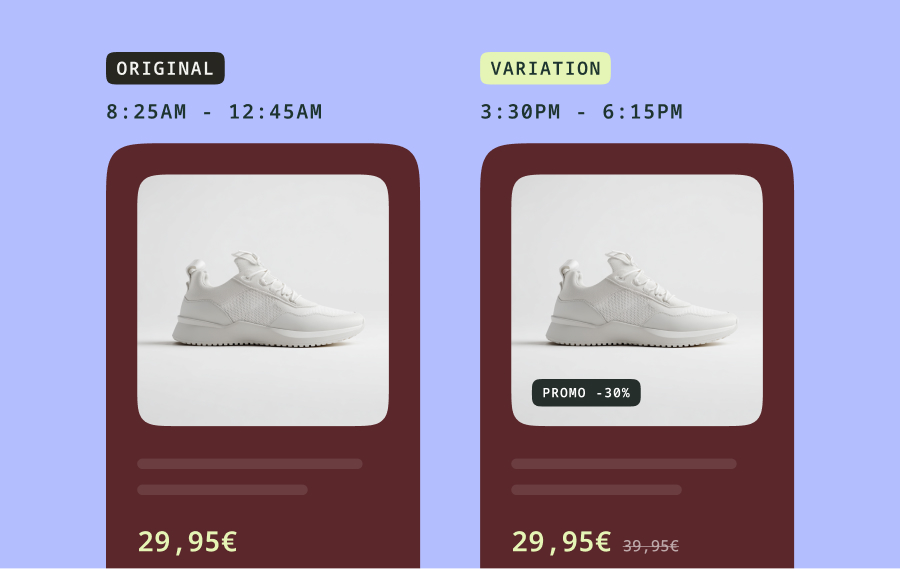

You should validate engineering work via experiments to avoid exposing 100% of users to a change that can potentially damage your business.


Shipping features fast without proving they work is a massive problem in engineering, thanks to lofty goals or the need for speed. Imagine you release an update that inadvertently disrupts your prospects' ability to sign up. Now, you're stuck rolling back code and figuring out how many users are stuck with the faulty version. This process wastes valuable time and resources, not to mention the potential customers you've lost in the meantime. It's a clear reminder of the importance of balancing speed with thorough testing.

Eddie Aguilar

Founder of Blazing Growth


You have two choices: you can either prove the value of your idea first or throw tons of resources at a concept that might result in a net negative.

In addition to reducing risk to your business, validating engineering work via experiments prevents wasted engineering resources. Simply signing up for an experimentation tool and testing a few hypotheses can make a positive impact on your bottom line.

By testing a proposed change on a small subset of users first, you can minimize any potential negative impact on revenue. Plus, experimenting helps you figure out what works and what doesn't, increasing your chances of success.
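A common way to expose only a small group of users is deterministic bucketing: hash each user ID together with an experiment name and compare the result with a rollout percentage, so the same user always lands in the same group. This is a generic sketch with hypothetical names (`in_test_group`, `new-signup-flow`), not how any particular experimentation tool assigns users.

```python
import hashlib


def in_test_group(user_id: str, experiment: str, percent: float) -> bool:
    """Return True if this user should see the change in `experiment`.

    Hashing "experiment:user_id" gives a stable, roughly uniform bucket in 0..9999,
    so about `percent`% of users (in 0.01% steps) are exposed and assignment never flips.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest()[:8], 16) % 10_000
    return bucket < percent * 100


# Expose roughly 10% of (hypothetical) users to a new sign-up flow.
users = [f"user-{i}" for i in range(20_000)]
exposed = sum(in_test_group(u, "new-signup-flow", 10) for u in users)
print(f"{exposed} of {len(users)} users exposed")
```

Including the experiment name in the hash keeps a user's group in one experiment independent of their group in another.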

How can I estimate the value a winning test would generate once shipped?

Whether negative or positive, all tests have value. Each test provides key details about a proposed change or feature's impact on the business.

For example, we can measure how a price change might affect business revenue and profit during the experiment. Then, extend this figure to give a longer-term estimate of its impact. What we’re striving for here is to rationalize the unknown.
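A simple way to extend an experiment result is to compare revenue per visitor between control and variant and multiply the difference by expected annual traffic. This is a naive linear projection that assumes the effect persists at the same size, traffic stays constant, and the observed lift is real rather than noise. The function name and all figures below are hypothetical.

```python
def project_annual_impact(control_revenue: float, control_visitors: int,
                          variant_revenue: float, variant_visitors: int,
                          annual_visitors: int) -> float:
    """Naively project the yearly revenue change if the variant were shipped to everyone."""
    rpv_control = control_revenue / control_visitors  # revenue per visitor, control
    rpv_variant = variant_revenue / variant_visitors  # revenue per visitor, variant
    return (rpv_variant - rpv_control) * annual_visitors


# Hypothetical price test: $5.00 vs $5.40 revenue per visitor over 10,000 visitors each.
impact = project_annual_impact(50_000, 10_000, 54_000, 10_000, annual_visitors=1_200_000)
print(f"Projected annual revenue change: ${impact:,.0f}")
```

For a price change you would usually project profit as well as revenue, and report a range around the lift rather than a single number.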

From my perspective, I like to estimate the value of a winning test in terms of ‘value of now’ versus ‘value of later.’ Value of now refers to metrics like Marketing Qualified Leads (MQLs), Sales Qualified Leads (SQLs), conversions, and sales, while value of later refers to metrics like net revenue, Lifetime Value (LTV), Customer Acquisition Cost (CAC), and retention.

How you determine the average value of each depends on your business, but one of the most important metrics is LTV. HubSpot calculates LTV as (Customer Value x Average Customer Lifespan).

I like to add an extra variable to the LTV calculation, called Average Time Spent. Average Time Spent is how long, on average, it takes your business to close a deal. Or, from an engineering perspective, how long it takes to test, build, and get a feature into production.

Adding this to the LTV formula provides a more accurate figure you can measure your experimentation efforts against.

The calculation I use for LTV is:


LTV = (Customer Value x Average Customer Lifespan)/Average Time Spent


I’ll give you an example. Imagine that your demo closing rate is 60%. You hypothesize that adding a “Get Demo Now” call to action (CTA) to your website should increase this close rate. You proceed to spend 40 hours adding these CTAs, but find there’s not a lot of impact.

Digging deeper, you notice your business receives a lot of phone calls. This leads you to the realization that your audience prefers to speak to someone on the phone.

You then hypothesize that changing your call script to drive more demos should help you close more sales. The change increases the number of demos, and it takes only 15 hours to update the call script for a few agents.

Use this Average Time Spent in your LTV calculation to better evaluate each experiment's outcome. Experiments that take months to decide usually have little impact, because by the time you see results, you've already spent too much time. This is why quick experiments drive results faster.
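The LTV calculation above translates directly into code. This sketch applies it to the demo example with hypothetical inputs: a customer value of $1,200 per year and a three-year lifespan, held equal for both changes so that only the time spent differs. In the example the CTA change actually had little impact, so its real figure would be lower still. The function name is illustrative.

```python
def time_adjusted_ltv(customer_value: float, avg_customer_lifespan: float,
                      avg_time_spent: float) -> float:
    """LTV = (Customer Value x Average Customer Lifespan) / Average Time Spent."""
    if avg_time_spent <= 0:
        raise ValueError("avg_time_spent must be positive")
    return customer_value * avg_customer_lifespan / avg_time_spent


# Hypothetical: $1,200 per customer per year, customers stay 3 years.
cta_change = time_adjusted_ltv(1_200, 3, avg_time_spent=40)     # 40 hours adding CTAs
script_change = time_adjusted_ltv(1_200, 3, avg_time_spent=15)  # 15 hours on the call script
print(f"CTA change: {cta_change:.0f} per hour, call script: {script_change:.0f} per hour")
```

Because time spent sits in the denominator, the cheaper experiment scores higher even before you account for its larger impact.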

What types of engineering work can be validated with experimentation?

Engineering teams can validate their backend code with A/B testing. Imagine you're a business about to undergo an IPO. Your executives want to ensure your site is optimized to accept new sources of traffic.

Your team decides that rebuilding the backend infrastructure on a modern stack is a better course of action than spending time addressing tech debt.

The estimated time frame for tech debt fixes is four to nine months, while the estimated time for a backend refresh is three months.

In situations like this, a backend refresh seems more reasonable. But how do you ensure that it doesn’t impact your bottom line?

This is where solutions like Kameleoon come in handy. Kameleoon can work alongside solutions like Cloudflare and handle redirects between the two backends on a DNS level. This ensures users who enter your experiment never see a “flash” or redirect, while you test your new code or CMS.

This type of testing ensures your new backend or server-side code performs as well as the old one. It also limits the damage if the new server-side stack has problems, since only part of your traffic is exposed to it rather than 100%.
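To check that the new backend converts as well as the old one, one common approach is a two-proportion z-test on conversion counts from each backend. This technique is swapped in as an illustration, not something the interview prescribes; the function name and counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between conversion rates A and B.

    Returns (z, p_value); a negative z means B converts worse than A.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate if A and B were the same
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical counts: old backend (A) vs new backend (B), 10,000 sessions each.
z, p = two_proportion_z_test(500, 10_000, 480, 10_000)
print(f"z = {z:.2f}, p = {p:.2f}")
```

A non-significant result does not prove the two backends are equivalent, since a small sample can miss a real drop. A stricter check is a non-inferiority test or a confidence interval on the difference, alongside technical metrics like error rates and latency.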

Which team should be conducting experiments to validate future engineering work?

If your goal is sales or growth, marketing should be prioritizing experimentation. Typically marketing moves fast, and so does the audience you’re trying to sell to. When marketing owns experimentation, you can ship more production-ready changes or features using fewer engineering resources.

But marketing-led experimentation needs to be run by someone who understands its value, the process required to analyze results, and the technical aspects of experimentation.

The concept of marketing-led experimentation also requires dedicated engineering resources. This includes an engineer who owns the experiment rollouts and the data being used on the website, plus an experiment engineer who knows how to modify the website to serve experiments. Once experiments are validated, the engineering team can build them into the website or platform.

How can experimentation teams grow revenue faster?

Spending too much time debating the details of each test, like its appearance or wording, will only slow you down. The quicker you learn, the easier it will be to adapt to subsequent changes. Never stop learning or iterating.


Don’t waste six months trying to get a concept rolling. The businesses and teams I've seen growing revenue faster are those willing to move mountains to get experiments launched.

Eddie Aguilar

Founder of Blazing Growth


When I’m asked to help a company expand its testing capabilities, one of my first objectives is to get all teams and departments communicating with each other. For things to progress fast and mountains to be moved, business teams need to collaborate. Each team also tends to use its own separate tools, so companies often need a partner like Blazing Growth to personalize connections between teams and connect their tools and data.